So what exactly is Big Data?

Everyone is talking about Big Data and how it can transform our lives in areas as diverse as fighting crime and detecting brain tumours. But even though the term “Big Data” first emerged into public consciousness nearly ten years ago, there is still confusion about what it actually means, particularly in the business community. Only 37% of senior managers in medium-sized companies said they would be confident about explaining the term to someone else.

So here’s a guide to Big Data and the background behind it.

Isn’t Big Data just large amounts of data?

In 1956, IBM launched the snappily named RAMAC 305 (RAMAC stood for “Random Access Method of Accounting and Control”). This was the world’s first commercial computer to contain a magnetic disk drive and could store up to 5MB of data – an astonishing amount of data storage at the time, but equivalent to one MP3 track today. Just over 60 years later, a very ordinary home or office computer can hold 1 terabyte of data, giving it 200,000 times the storage capacity of the RAMAC 305.
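The 200,000 figure can be checked with a couple of lines of arithmetic. This is a minimal sketch that assumes decimal units (1MB = 1,000,000 bytes, 1TB = 1,000,000,000,000 bytes); the variable names are purely illustrative.

```python
# Assumption: decimal (SI) units, so 1 MB = 10**6 bytes and 1 TB = 10**12 bytes.
MB = 10**6
TB = 10**12

ramac_capacity = 5 * MB    # RAMAC 305, launched in 1956
modern_capacity = 1 * TB   # an ordinary home or office computer

# How many RAMAC 305s would it take to match one modern machine?
ratio = modern_capacity // ramac_capacity
print(f"{ratio:,}")  # 200,000
```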

Without this dramatic increase in the ability to store information, Big Data could not exist. Over 2.5 quintillion bytes of data are created every single day. Over half the world’s population now has access to the internet, Google processes 3.5 billion searches every day, and 156 million emails are sent every minute. 90% of the data that has ever been created was produced within the last two years.

The increase in the amount of data created will only accelerate. We are now seeing the advent of the Internet of Things, where devices in our houses, our cars and gadgets worn on our bodies can connect to each other and to the internet. These devices pump out a steady stream of data, which can be analysed with the aim of increasing efficiency or generating alerts when action needs to be taken. All of this adds to the data created directly by human beings.

Big Data is indeed... big!

Different types of data

However, size isn’t the only thing that defines Big Data.

The first large datasets were gathered by governments, usually with the aim of working out how much tax they could collect. The Domesday Book, completed in 1086, was an early example of this. Births and deaths were also recorded by governments, and in the mid-19th century a doctor called John Snow carried out an analysis of death certificates during a cholera epidemic in London. Using an early data visualisation, he worked out that there was an unusually high number of deaths around a particular water pump in Broad Street, leading him to conclude that cholera was spread through contaminated water, which had not been known before. In the 20th century, companies started to collect large amounts of data about their transactions and customers; even before the official era of Big Data, corporations such as banks and big retailers held huge amounts of data about customers and their interactions with the company.

But these were all relatively straightforward datasets, which were compiled by big institutions and consisted mostly of numerical data. What has changed is the amount of data that is now being produced by individuals, particularly as a result of using social media. Every minute, 456,000 tweets are sent, Instagram users post 46,740 photos, 510,000 comments are posted on Facebook and 400 hours of video are uploaded to YouTube. This content comes in a much wider variety of forms than the dry statistics and numbers which made up traditional datasets, including long-form text, images, audio and video.

Big Data therefore also encompasses the idea that data comes in a much wider variety of formats than it used to.

But what’s the point of all this data?

In 1965, Gordon Moore, who went on to co-found Intel, made the observation that the number of transistors per square inch on integrated circuits had doubled every year since the integrated circuit was invented. This rather opaque statement (better known as Moore’s Law) basically means that the speed of computers is increasing at an exponential rate. The pace has slowed since Moore originally made this statement, but experts believe that data density will continue to double every 18 months until 2020-2025.
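To get a feel for what doubling every 18 months implies, here is a rough sketch of the arithmetic. The function name `growth_factor` is our own, and the calculation assumes a perfectly steady doubling period, which real hardware does not follow exactly.

```python
def growth_factor(years, doubling_period_years=1.5):
    """Return how many times larger a quantity becomes after `years`,
    assuming it doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)

# Three years is two doublings, six years is four, fifteen years is ten.
for years in (3, 6, 15):
    print(f"After {years} years: x{growth_factor(years):,.0f}")
```

Ten doublings in fifteen years is roughly a thousandfold increase, which is why exponential growth feels slow at first and then overwhelming.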

This is important because collecting large amounts of different types of data might be technologically impressive but doesn’t necessarily tell you anything interesting or useful. Getting insight out of the data requires analysing it, and analysing very large datasets takes a lot of processing power.

Of course, traditional statisticians have analysed large datasets for centuries to try to find correlations and patterns, and most modern analytical techniques are based directly on statistical methods such as regression (which asks whether variables are correlated with each other).

But statisticians were often dealing with samples from a population. For example, polling companies make inferences about what the whole country feels about an issue by analysing the responses of a few thousand people. The idea behind Big Data is that you no longer need to look at samples because you have access to data about the whole population, which (as mentioned earlier) can come in a whole variety of different forms. To find all the patterns and correlations in these huge and complex datasets, you are going to need a machine. And to do it quickly, you may well need a machine that can learn by itself.

Machine Learning – what is it?

Machine learning is a branch of Artificial Intelligence which aims to allow computers to learn automatically without human intervention. There are a couple of main types of machine learning.

Supervised machine learning algorithms are given a “training set” of data. For example, if we wanted the algorithm to learn how to tell fraudulent and non-fraudulent credit card transactions apart, we would give it a historical set of transactions which humans had pre-labelled as either fraudulent or non-fraudulent. Then it would be let loose on a dataset containing lots of other information about the customers and the transactions they made: what was purchased, where and when it was purchased, what was bought with what, and so on. The algorithm would then try to work out what the legitimate transactions had in common and what distinguished them from the fraudulent ones.

Once the algorithm had established some rules to identify the differences, it would then be given a new set of transactions which didn’t have the answer pre-provided, to see whether these rules work in real life. One of its rules might be that a transaction is probably fraudulent if someone suddenly buys a lot of ski equipment having never bought anything ski-related before, and having never even been to a country where skiing is popular. Once exposed to a real set of transactions, it would flag these transactions as suspicious, triggering a call to the credit card holder. But if most of these card holders confirmed that the transaction was in fact legitimate, that data would be fed back into the algorithm, and the computer might decide that maybe this rule wasn’t that useful after all.
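The train, flag and feed-back loop described above can be sketched in a few lines. This is a deliberately tiny illustration, not how a real fraud system works: the two features, the made-up transaction history and the simple majority-vote rule are all assumptions chosen to keep the example readable.

```python
from collections import defaultdict

def train(labelled):
    """Learn, for each feature pattern, whether most labelled examples were fraud."""
    counts = defaultdict(lambda: [0, 0])  # pattern -> [legitimate, fraudulent]
    for features, is_fraud in labelled:
        counts[features][int(is_fraud)] += 1
    # Flag a pattern only if fraud is the majority label seen for it.
    return {pattern: fraud > legit for pattern, (legit, fraud) in counts.items()}

def predict(rules, features):
    # Patterns never seen in training default to "not suspicious" in this sketch.
    return rules.get(features, False)

# Hypothetical features: (new_product_category, never_visited_ski_country).
# Label: True means humans marked the transaction as fraudulent.
history = [
    ((True, True), True),
    ((True, True), True),
    ((True, True), False),
    ((True, False), False),
    ((False, False), False),
    ((False, True), False),
]

rules = train(history)
flag_before = predict(rules, (True, True))  # the "ski equipment" rule fires

# Card holders confirm that three flagged purchases were actually legitimate.
feedback = [((True, True), False)] * 3
rules = train(history + feedback)
flag_after = predict(rules, (True, True))   # the rule no longer fires

print(flag_before, flag_after)
```

The point is the shape of the loop: labelled history goes in, rules come out, and confirmed outcomes are fed back in so that unhelpful rules are dropped.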

Unsupervised machine learning algorithms, on the other hand, are used when the data is not pre-labelled. The aim of this kind of machine learning is not to figure out whether something is “right” or “wrong” but to explore the data and find hidden structures in it. Often this is used to group the data points into clusters or categories. For example, you could take all the comments on a business’s social media page as your dataset and then have the algorithm break them down into categories. You wouldn’t have any idea in advance what categories the algorithm will come up with, but the results may well help a business understand what kinds of things customers are saying about it without having to individually trawl through tens of thousands of comments.
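One common clustering technique is k-means, sketched below in plain Python. We are assuming each comment has already been turned into two numbers (here, hypothetical counts of price-related and delivery-related words); real text analysis needs a much richer representation, and the naive starting centroids are a simplification.

```python
import math

def kmeans(points, k, iterations=20):
    """Group points into k clusters by repeatedly assigning each point to its
    nearest centroid and then moving each centroid to the mean of its cluster."""
    centroids = list(points[:k])  # naive initialisation: the first k points
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to its cluster mean; keep it in place if the cluster is empty.
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
    return centroids, labels

# Hypothetical features per comment: (price-related words, delivery-related words).
comments = [(5, 0), (0, 4), (4, 1), (1, 5), (6, 1), (0, 6)]
centroids, labels = kmeans(comments, k=2)
print(labels)
```

Notice that nobody told the algorithm that one group is "about price" and the other "about delivery". It only finds the groups; a human still has to look at them and decide what they mean.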

Big Data defined

There are lots of short definitions of Big Data out there, but most of them revolve around the “three Vs”: volume, variety and velocity. Volume just means there’s a lot of data (as we saw in the first section). Variety is the different types of data we now collect, which we looked at in the second section. Velocity refers to the speed of data processing that is necessary to get useful insights out of the data we have collected.

So does Big Data mean we can let computers make all the decisions?

Absolutely not!

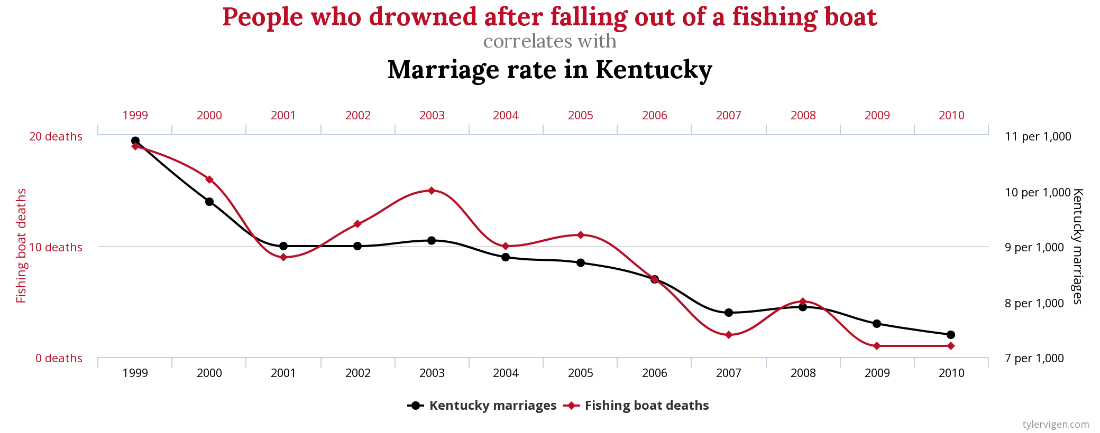

There are a number of very good reasons for this. Algorithms are very good at finding correlations between different variables, but that doesn’t mean that there is a causal link between them. For example, between 1999 and 2010 there looks to be a very strong correlation between the number of people who drowned after falling out of a fishing boat and the marriage rate in the American state of Kentucky.

The odds of such a strong link between these two variables occurring purely by chance are around 1 in 500,000 – a low enough figure for statisticians to assume that there must be a significant link between them. But of course, falling out of a boat has nothing to do with marriage rates. An algorithm which is looking through literally millions of combinations of variables will inevitably find a small number of correlations which have occurred by accident. A human being could immediately spot these as being spurious, but a machine by itself would not know this.
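It is easy to see this effect for yourself. The sketch below compares one random 12-point series (one value per year, 1999 to 2010) against 20,000 other random series that have nothing to do with it. The series are pure noise and the numbers 12 and 20,000 are our own choices for illustration.

```python
import math
import random

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

random.seed(0)         # fixed seed so the run is repeatable
YEARS = 12             # one data point per year, as in the 1999-2010 example
N_CANDIDATES = 20_000  # how many unrelated variables we trawl through

target = [random.gauss(0, 1) for _ in range(YEARS)]

# Every candidate is independent random noise, so any correlation is accidental.
strongest = max(
    abs(pearson(target, [random.gauss(0, 1) for _ in range(YEARS)]))
    for _ in range(N_CANDIDATES)
)
print(f"Strongest correlation found by chance: {strongest:.2f}")
```

With this many candidates, the strongest accidental correlation tends to look very convincing, even though every series is noise. The more variables you search through, the more impressive the coincidences you will find.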

But even where a link between two variables makes sense, this doesn’t necessarily mean that it is a useful piece of information. Often statistical analysis simply reveals things that managers in a business already knew; a very obvious example would be a correlation between hot weather and rising ice cream sales. On the other hand, a relationship that is interesting and new might be uncovered which the business cannot act on. For example, sales might be related to increases in government expenditure, but the business obviously has no power to change public spending. In both cases, consulting with managers, who are the subject matter experts in their business, is essential to direct data analysis towards areas which can actually change the business for the better.

Machines are good at taking millions of “micro” decisions, e.g. whether a transaction is fraudulent or not, or whether an email should be classified as spam. But they cannot direct strategy in a business because they do not know anything about the world outside the dataset they have been given to look at. They don’t necessarily know about the wider economic environment, about the latest consumer trends, about your company’s values and culture, about changes in technology and all the other myriad pieces of information that humans pick up all the time without thinking about it.

Humans and machines working together

Big Data and data analytics are extraordinary technologies which help businesses and other organisations make better decisions, and those which fail to take advantage of these tools will find themselves falling behind. But Big Data will never be big enough to replace the depth of knowledge and understanding that humans have about the world. In the end, businesses should neither be frightened that human managers will be completely replaced as decision-makers, nor believe that buying an expensive piece of new technology will solve all their decision-making problems without needing human expertise as well.

Get in touch

If you believe that evidence-based decision making is driven by collaboration between analysts and business managers, then contact us to talk about how your business uses data, and find out whether there is a fit between your needs and the services Sensible Analytics can offer.